I have 1,500 rows I need to shove into a table. I don’t have access to the database, directly.

But my DBA is happy to give me a HTTPS entry point to my data.

What do I do?

Let’s look at a Low-Code solution:

Oracle REST Data Services & Auto REST for tables.

With this feature, you can say for a table, make a REST API available for:

- querying the table

- inserting a row

- updating a row

- deleting a row

- getting the metadata for a table (DESC)

- bulk load the table

Now I’ve previously shown how to INSERT a record to a table with ORDS via POST.

But that’s just one row at a time.

Let’s do it for 1500 rows. And I don’t have the data in JSON. No, my data nerd has given me a CSV ‘dump.’

How do I get it in?

If you want to consult the ORDS Docs, this is what we’re going to be using (DOCS).

For the POST to be received happily by ORDS, we ASSUME:

- the URI is avail, as the table has been REST enabled

- the first rows will be the column names

- the rest of the rows are your data

You have lots of options you can pass as parameters on the call. See the DOCS link above.

Ok, let’s do it.

Build Your Table

I’m going to run this code in SQL Developer.

CREATE TABLE stuff AS SELECT OWNER, object_name, object_id, object_type FROM all_objects WHERE 1=2; CLEAR SCREEN SELECT /*csv*/ OWNER, object_name, object_id, object_type FROM all_objects fetch FIRST 1500 ROWS ONLY;

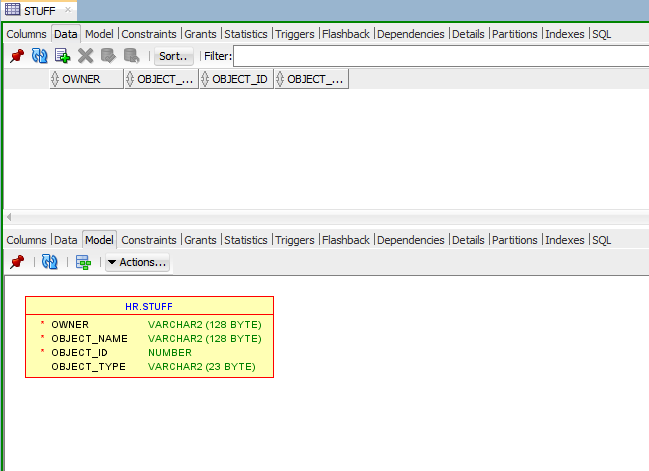

That spits out a new table:

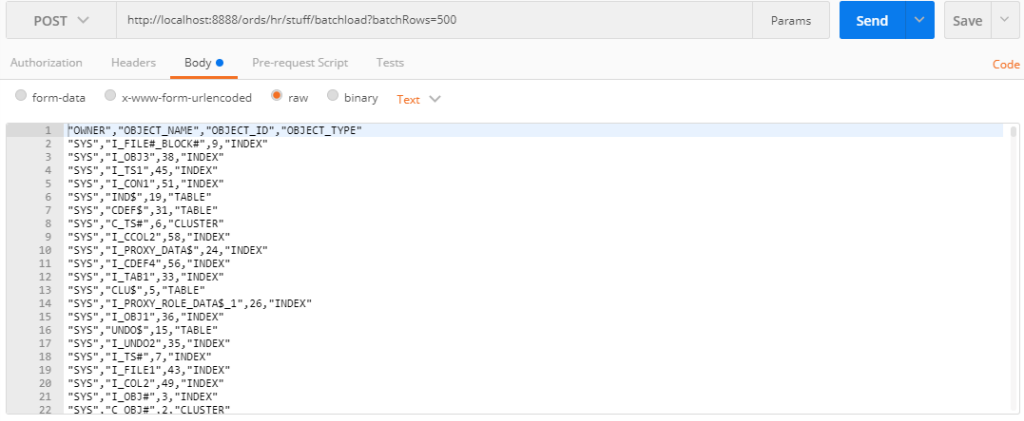

…and some CSV that looks like this:

"OWNER","OBJECT_NAME","OBJECT_ID","OBJECT_TYPE" "SYS","I_FILE#_BLOCK#",9,"INDEX" "SYS","I_OBJ3",38,"INDEX" "SYS","I_TS1",45,"INDEX" "SYS","I_CON1",51,"INDEX" "SYS","IND$",19,"TABLE" "SYS","CDEF$",31,"TABLE" "SYS","C_TS#",6,"CLUSTER" "SYS","I_CCOL2",58,"INDEX" "SYS","I_PROXY_DATA$",24,"INDEX" "SYS","I_CDEF4",56,"INDEX" "SYS","I_TAB1",33,"INDEX" "SYS","CLU$",5,"TABLE" "SYS","I_PROXY_ROLE_DATA$_1",26,"INDEX" ...

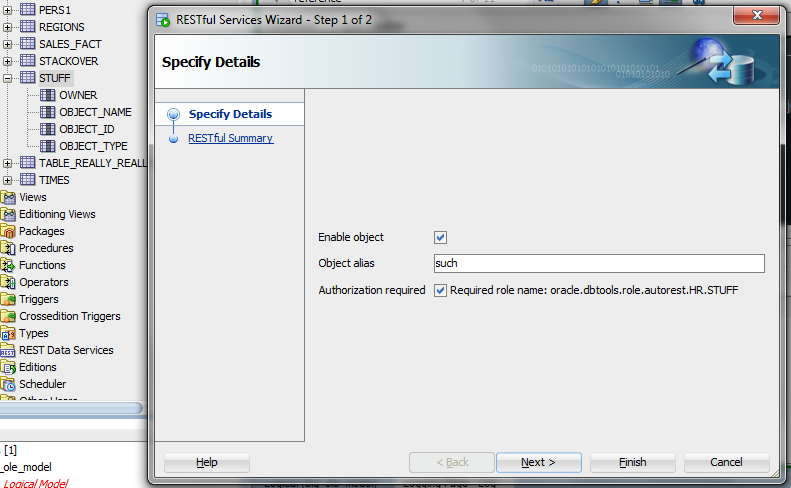

REST Enable the Table

You’ve already got ORDS going. You’ve already got your schema REST enabled, now you just need to do this bit to get your GET, POST, PUT, & DELETE HTTPS methods available for the Auto Table bits.

Now we can make the call.

We POST to the endpoint, it’s going to follow this structure:

/ords/schema/table/batchload

The CSV will go in the POST body.

POST /ords/hr/stuff/batchload?batchRows=500 I’m asking ORDS to do inserts in batches of 500 records – less commits than say, batches of 25 records

The CURL would look like this:

curl -X POST \ 'http://localhost:8888/ords/hr/stuff/batchload?batchRows=500' \ -H 'cache-control: no-cache' \ -H 'postman-token: 1eb3f365-f83d-c423-176d-7e8cd08c3eab' \ -d '"OWNER","OBJECT_NAME","OBJECT_ID","OBJECT_TYPE" "SYS","I_FILE#_BLOCK#",9,"INDEX" "SYS","I_OBJ3",38,"INDEX" "SYS","I_TS1",45,"INDEX" "SYS","I_CON1",51,"INDEX" "SYS","IND$",19,"TABLE" "SYS","CDEF$",31,"TABLE" "SYS","C_TS#",6,"CLUSTER" "SYS","I_CCOL2",58,"INDEX" "SYS","I_PROXY_DATA$",24,"INDEX" "SYS","I_CDEF4",56,"INDEX" "SYS","I_TAB1",33,"INDEX" "SYS","CLU$",5,"TABLE" "SYS","I_PROXY_ROLE_DATA$_1",26,"INDEX" ...

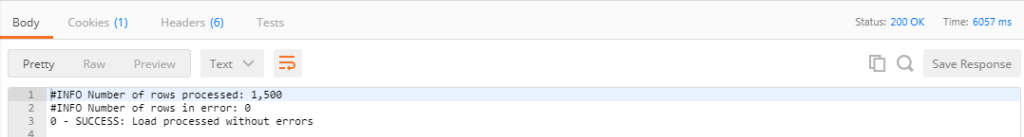

And the results…about 6 seconds later.

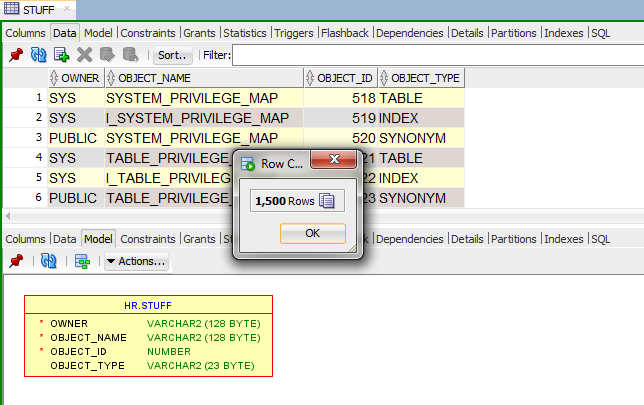

And just because I like to double check…

The first time I tried this, it was with ?batchRows=100, so 15 COMMITs for the load, and it took 12 seconds. So I cut the time in half by doing baches of 500 rows at a time. You’ll want to experiment for yourself to find an acceptable outcome.

Trivia, caveats, etc.

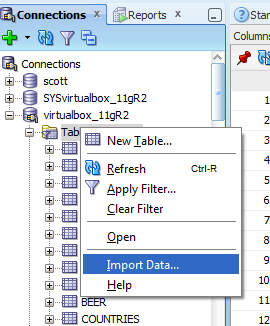

The ORDS code that takes the CSV in and INSERTs it to the table is the SAME code SQL Developer uses here:

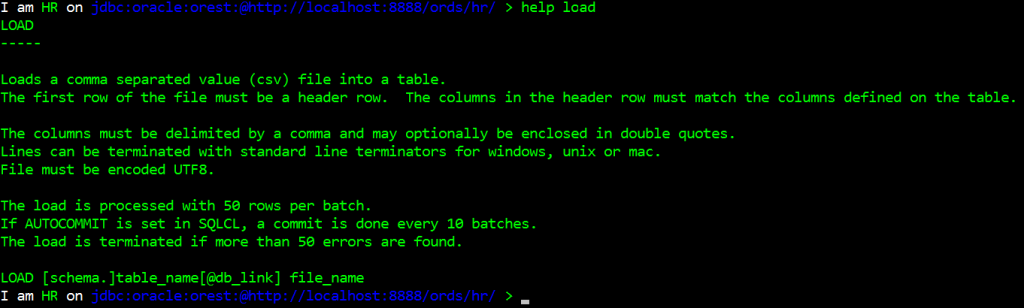

And it’s the same code SQLcl users here:

This is not the ideal way to load a LOT of data.

ORDS employs INSERT statements to insert your data using the AUTO route. An external table and CTAS will always be faster. And of course you have SQL*Loader and DataPump. But those require database access. This does NOT.

Or, you could always roll your own code and build your own RESTful Service. And perhaps you should. But if quick and dirty are good enough for you, we won’t tell anyone.